Recently, I decided to move my blog’s images off of GitHub. I enjoy sharing photos from my travels, and my uploads folder was close to hitting GitHub’s repo limit of 1 GB. Since I had some free time over the holidays, I challenged myself to come up with an easy way to upload images to Google Cloud Storage using the Python SDK. Please keep in mind I only started learning Python two months ago, so the code shared in this post isn’t perfect, but it works.

The following imports are required for this tutorial. pyperclip and google-cloud-storage are third-party packages that need to be installed with pip; the rest ship with Python.

    import datetime
    import os
    import re
    import shutil
    import sys

    import pyperclip
    from google.cloud import storage

The first step was writing a function to create the desired file path for the image. On my blog, I use /uploads/year/month/image.jpg for the file path format. This function is very simple: it stores the current year and month in variables, builds the file path, and returns it.
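To see the format in isolation, here is a minimal sketch that builds the same prefix from a fixed date instead of the current one. The helper name `file_path_for` and the sample date are assumptions made for illustration; they are not part of the original script.

```python
import datetime


def file_path_for(moment):
    """Return the /uploads/year/month prefix for the given datetime."""
    year = moment.strftime("%Y")   # four-digit year, e.g. "2020"
    month = moment.strftime("%m")  # zero-padded month, e.g. "01"
    return f"/uploads/{year}/{month}"


# Hypothetical sample date, used only to make the output predictable.
sample = datetime.datetime(2020, 1, 5)
print(file_path_for(sample))  # /uploads/2020/01
```

Passing the date in as a parameter also makes the path logic easy to check without waiting for the month to change.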

    def create_file_path():
        # Build the /uploads/year/month prefix from the current date.
        now = datetime.datetime.now()
        year = now.strftime("%Y")
        month = now.strftime("%m")
        file_path = f"/uploads/{year}/{month}"
        return file_path

The next part of the code is a function called upload_to_dropbox(). I store my local BrianLi.com repo in my Dropbox folder, which makes it easy to keep a secondary backup of my site in case something happens to my computer. Copying my images to Dropbox also gives me a backup of them in case something happens to Google Cloud Storage.

This function uses regex to extract the file name from the file path. Next, it creates another destination path to my Dropbox folder. Notice how upload_to_dropbox() calls create_file_path() in order to build the /uploads/year/month portion of the file path. Finally, the last line of this function copies the image to the destination path.
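The file name extraction step can be tried on its own. The sketch below applies the regex to a sample path and compares the result with `os.path.basename()` from the standard library, which is a swapped-in alternative rather than what the script uses. Both helper names are hypothetical.

```python
import os
import re


def filename_via_regex(path):
    """Strip everything up to the last slash, keeping name.ext."""
    # The capture group must be closed with ")" or re raises an error.
    return re.sub(r"\/.*\/(.*\.\w{1,4})", r"\1", path)


def filename_via_basename(path):
    """Standard-library alternative that does not depend on the extension."""
    return os.path.basename(path)


sample = "/Users/brianli/Desktop/image.jpg"
print(filename_via_regex(sample))     # image.jpg
print(filename_via_basename(sample))  # image.jpg
```

The regex only matches extensions of one to four word characters, so `os.path.basename()` may be the safer choice if other file types are ever uploaded.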

    def upload_to_dropbox(file):
        # Extract the file name (e.g. image.jpg) from the full path.
        filename = re.sub(r"\/.*\/(.*\.\w{1,4})", r"\1", file)
        destination_path = f"/Users/brianli/Dropbox/brianli.com/static{create_file_path()}/{filename}"
        # Copy the image into the local repo. The year/month folder must already exist.
        shutil.copy(file, destination_path)

Next up is upload_to_gcs(), which is the function that does the actual uploading. You’ll need to replace /file/path/to/gcloud.json with the file path of the JSON file containing your Google Cloud credentials, and bucket-name with the name of your Google Cloud Storage bucket. Since this function’s use case is to upload publicly viewable images to Google Cloud Storage, I used blob.make_public() to set the permissions. After a successful upload, the function prints the public URL of the uploaded asset.
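Two small details in the function that follows are easier to see in isolation: the leading slash is dropped from the path to form the object name, and the public URL is derived from the bucket and object names. The sketch below reproduces both without contacting Google Cloud. The helper names are hypothetical, and the URL pattern is my understanding of what blob.public_url returns for a public object, so treat it as an assumption to verify against your own bucket.

```python
from urllib.parse import quote


def object_name_for(file_path, filename):
    """Join the path prefix and file name, dropping the leading slash."""
    return f"{file_path[1:]}/{filename}"


def expected_public_url(bucket_name, object_name):
    """Assumed URL pattern for a publicly readable object."""
    quoted = quote(object_name, safe="/~")
    return f"https://storage.googleapis.com/{bucket_name}/{quoted}"


name = object_name_for("/uploads/2020/01", "image.jpg")
print(name)  # uploads/2020/01/image.jpg
print(expected_public_url("bucket-name", name))
# https://storage.googleapis.com/bucket-name/uploads/2020/01/image.jpg
```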

    def upload_to_gcs(file):
        os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = "/file/path/to/gcloud.json"  # Set gcloud credentials.
        client = storage.Client()  # Create the gcloud client.
        bucket = client.get_bucket("bucket-name")  # Specify the gcloud bucket.
        filename = re.sub(r"\/.*\/(.*\.\w{1,4})", r"\1", file)
        blob = bucket.blob(f"{create_file_path()[1:]}/{filename}")  # Object name format: uploads/year/month/filename.
        blob.upload_from_filename(file)
        blob.make_public()
        url = blob.public_url
        print(f"Image URL - {url}")

Since I am using this function to upload images for my blog, I wrote another function to generate the correct Hugo shortcode syntax and copy it to the clipboard, so it is ready to paste into a post.
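The doubled braces in the f-string are the only subtle part of this function: inside an f-string, `{{` produces a literal `{`, so four braces are needed to emit the two that a Hugo shortcode expects. Here is a minimal sketch with a hypothetical helper name and sample path, leaving out the clipboard step:

```python
def img_shortcode_for(src):
    """Return an img shortcode string for the given image path."""
    # "{{{{" renders as "{{" and "}}}}" as "}}"; {src} is substituted.
    return f"{{{{< img src=\"{src}\" alt=\"\" >}}}}"


print(img_shortcode_for("uploads/2020/01/image.jpg"))
# {{< img src="uploads/2020/01/image.jpg" alt="" >}}
```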

    def copy_img_shortcode(file):
        filename = re.sub(r"\/.*\/(.*\.\w{1,4})", r"\1", file)
        destination_path = f"{create_file_path()}/{filename}"
        # Quadruple braces produce the literal {{ and }} of the shortcode.
        img_shortcode = f"{{{{< img src=\"{destination_path[1:]}\" alt=\"\" >}}}}"
        pyperclip.copy(img_shortcode)

Finally, the code below executes the functions above in the correct order. The file = sys.argv[1] line assigns the first command-line argument to the file variable. For example, running python3 /path/to/image-upload.py /Users/brianli/Desktop/image.jpg would pass /Users/brianli/Desktop/image.jpg into the Python script.
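Because sys.argv[1] raises an IndexError when no argument is given, a small guard can make failures easier to read. This is a sketch rather than part of the original script; the function name `image_path_from_args` and the error messages are assumptions.

```python
import os
import sys


def image_path_from_args(argv):
    """Return the image path from argv, or exit with a readable message."""
    if len(argv) < 2:
        sys.exit("usage: python3 image-upload.py /path/to/image.jpg")
    path = argv[1]
    if not os.path.isfile(path):
        sys.exit(f"not a file: {path}")
    return path


# In the script, this would replace the direct lookup:
#     file = image_path_from_args(sys.argv)
```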

if __name__ == '__main__':

file = sys.argv[1]

upload_to_dropbox(file)

upload_to_gcs(file)

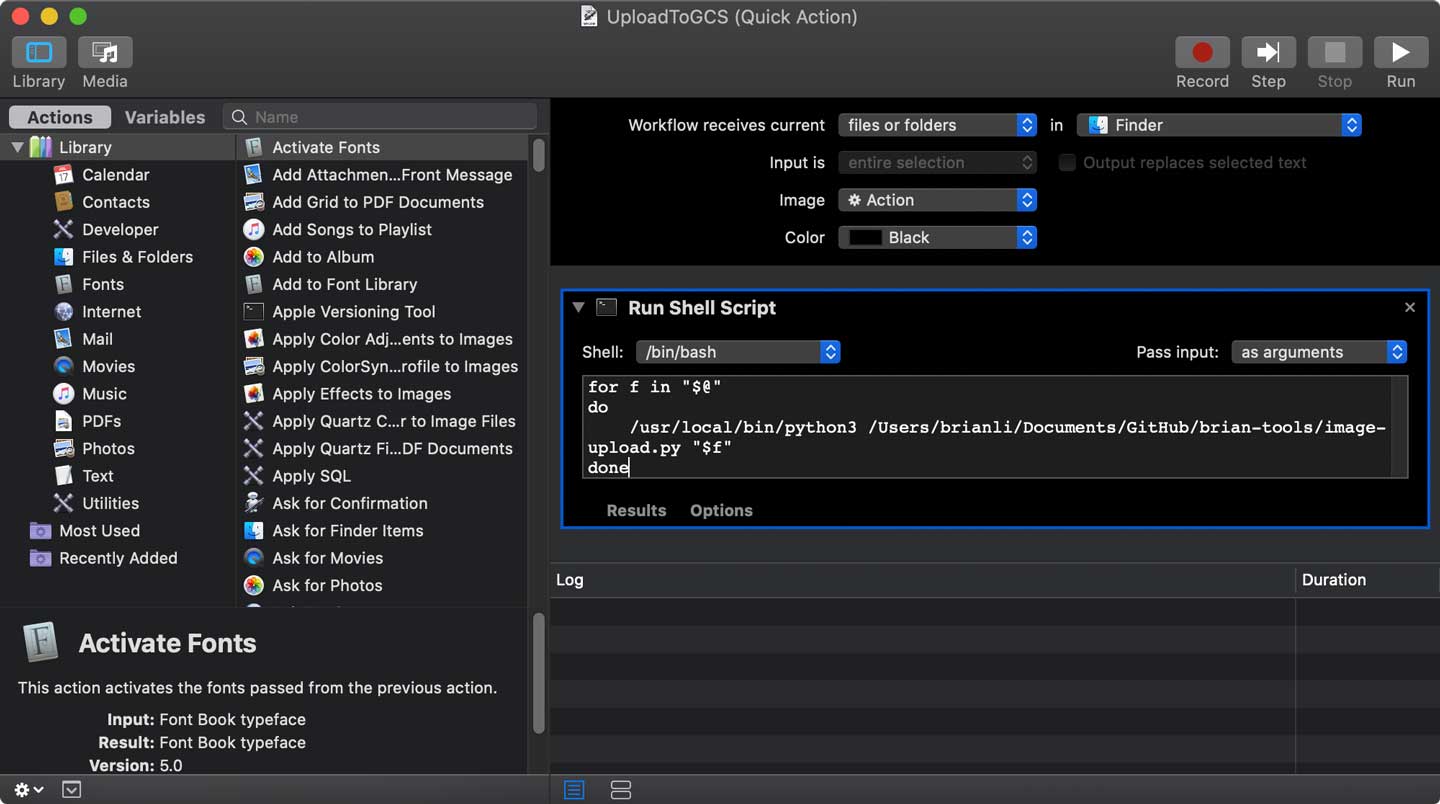

copy_img_shortcode(file)Using file = sys.argv[1] also makes it easy to integrate this Python script into an Automator action in macOS. The Bash script below can be used with Automator’s “Run Shell Script” option.

for f in "$@"

do

/usr/local/bin/python3 /path/to/image-upload.py "$f"

doneHere are the Automator settings I am using for my “UploadToGCS” quick action.

If you are using a static site generator like Hugo, Gatsby, or Jekyll, automating image uploads to Google Cloud Storage with Python can make for a more efficient workflow. I hope you enjoyed this tutorial, and please let me know if you have any questions or suggestions!